11 years of amateur sysadmin

Back in 2013, I started renting my first server. It was from Kimsufi, a service from OVH, which was super cheap compared to most other options around (and still is). I wanted to emancipate myself from big tech companies, and like most people, I had a Gmail account. My first goal for this server at the time, apart from hosting my website, was to host my own mail service for me and a few friends. That did not go as planned, but I still have a server, and I have done lots of things with it. Lately, I migrated to a VPS, and thought it was a good time to reflect on those years.

First, I'll give an overview of the solutions I went through over the years, then I'll talk about some of the services I use.

The goal is not to detail how things work, or to be a tutorial, so I'll try to keep it understandable and concise. It's an overview, with a kind of "post-mortem" vibe more than anything else.

Evolution of solutions

Everything everywhere all at once

I've always used Debian Linux for headless purposes, so the choice was only natural, and I have never changed since.

Running everything on the host without any encapsulation can work well, especially if the apps are themselves self-contained. However, each new service can conflict with the existing ones, and even just trying something out quickly could take a while. Each time, a new nginx configuration was needed, written by hand. And having a server is like induced demand: you just want to self-host anything and try out everything. So these services accumulate over time, you have to maintain them, and everything is done by hand. They all just run on the host system, and it's so easy to ruin your system if there's an issue... A lot of apps want to use the same ports, so it's just not a great way to run multiple services at once. Enter the concept of encapsulation.

First use of encapsulation: LXC

That's where LXC comes into play. I think it was around 2018 when I migrated everything to a new, more spacious server (still a Kimsufi) and scripted everything to automate as much as I could with LXC. At its core, LXC is a form of virtualization that relies on the capabilities of the host kernel. You use an image of an operating system, and put your app inside of it. That way, it is isolated, which resolves a few of the issues I mentioned. However, with LXC, there's still a lot to do by hand or with external tools: you have to configure and manage the apps yourself (like copying the files to the virtualized OS and updating them), create scripts to automate things, etc. It's still a lot of work, just encapsulated! And since the apps are encapsulated, you have to manage the network with iptables, and set up a reverse proxy to access them through the network. It is, in fact, a lot of hassle for anything. And there's a solution to those new issues, one that I started using when the world paused in 2020: Docker.

The Docker revelation

In fact, it was a bit silly to use LXC when Docker already existed and was all that and much more. It answered all the issues mentioned above. Well, at least LXC helped me understand the concepts more easily (initially, Docker was based upon LXC).

Like LXC, Docker is a system of encapsulation, running another OS on the host. What's more, there are images of a lot of apps on Docker Hub, already purpose-built, most of the time by the maintainers themselves. I do everything with Docker Compose, where my configuration is in a YAML file (you can also run Docker directly in the terminal with arguments, but that's mainly for other purposes). Here's an example of a configuration file to run a simple website with nginx:

    services:
      website:
        image: nginx
        environment:
          NGINX_HOST: host.com
          NGINX_PORT: 80
        volumes:
          - ./data:/usr/share/nginx/html
        restart: always
        container_name: website1
        deploy:
          resources:
            limits:
              memory: 10MB

As you can see with this example, a lot of things are done quite easily:

- getting an image of nginx running on an independent OS, in a contained environment;

- making it accessible on host.com;

- making it run on port 80;

- assigning local data to the encapsulated container (that's the volumes part);

- limiting its memory resources.

With more complex services, you'll have more configuration, but the basics will be the same. With one command, you can pull a new version (docker compose pull), and run it (docker compose up -d). That's mostly what I do to keep up to date. I can't think of any simpler way!

That's just a very light overview of Docker. But there's a lot you can do, and here are just a few examples: you can make your own images (that's what I did for my Japanese project), you can run a reverse proxy to easily serve your websites (more on that later in this post), you can run any app you want in a contained environment for testing purposes (not only web apps: just anything with any OS you want). It's a great tool, with lots of options, and still easy for all kinds of uses.
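
As a rough illustration of two of those uses, here is a minimal Compose sketch with a service built from a local Dockerfile and a throwaway Debian container for experiments. The service names, the ./myapp folder and the debian:stable tag are assumptions made for the example, not taken from a real setup.

```yaml
# Sketch only: service names, paths and the Debian tag are illustrative.
services:
  # Built from a local Dockerfile instead of being pulled from Docker Hub
  myapp:
    build: ./myapp            # hypothetical folder containing a Dockerfile
    restart: unless-stopped

  # Disposable Debian environment for experiments, isolated from the host
  sandbox:
    image: debian:stable
    command: sleep infinity   # keep the container alive so a shell can be opened in it
```

With a file like this, docker compose up -d --build would build and start both services, and docker compose exec sandbox bash would open a shell inside the test container.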

From dedicated servers to VPS

I quickly talked about this in my post about my blog migration. The aging CPU and hard drive of the dedicated server were becoming an issue for performance, notably for Ghost, a blog CMS. VPSs have powerful CPUs and usually run on fast SSDs, but can be quite limited in available resources. What I sacrificed is disk space, going from 2TB to 80GB. This led me to optimize the data I have on my server. What was taking up the most space were my photos: I shrank them with barely visible quality loss for a ~80% size reduction, and I think it'll be a long time before I need more space. One advantage of a VPS is that it is more easily upgradable: for instance, there's an option to increase storage if needed.

All in all, I wouldn't say that it is better than a dedicated server, but for a similar price, I have a significantly more powerful CPU and an M.2 SSD, with much less storage. It's a compromise I'm willing to make! But different situations call for different solutions.

Services

Mails

Managing a mail server turned out to be a nightmare! Email is super complicated, and no solution was easy to implement. Managing multiple domains, making sure the main providers (Microsoft, Google) accept your server, handling spam... It is just too much work, and I gave up after a few months of trial and mostly error. I tried individual packages as well as all-in-one solutions like iRedMail.

Even today, I wouldn't want to run a mail service. It's quite critical to be able to receive messages, so I prefer to leave this task to other people. I first used ProtonMail; however, I got tired of not being able to use third-party apps. Especially since most of my contacts would not use encryption on their end, it felt quite useless to have these limitations for no real gain. For the last few years, I have been using mailbox.org with a custom domain, which is quite cheap and super configurable.

Website

I have a few straightforward websites running on my server. Of course, my main website, but also a few projects. Those are served through a basic nginx container. This has always been an easy process, but with Docker, there's almost nothing I have to configure now.

nginx

Today, the backbone of my server is the nginx-proxy and acme-companion containers. All my containers are accessible on the internet thanks to nginx-proxy: the nginx configuration is automatically created by this container.

Thanks to acme-companion, SSL certificates are automatically generated and renewed. Prior to that, I had to renew them manually every three months, which I could forget, making everything inaccessible...
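
As a rough sketch, a proxy stack of this kind can be declared as follows. It is adapted from the layout documented by the acme-companion project rather than from an actual configuration: the e-mail address and volume names are placeholders, and the network name nginx-proxy is an assumption chosen to match the network that the other services join.

```yaml
# Minimal sketch of a reverse-proxy stack, adapted from the acme-companion docs.
# Placeholder values: the e-mail address and the volume names.
services:
  nginx-proxy:
    image: nginxproxy/nginx-proxy
    container_name: nginx-proxy
    restart: always
    ports:
      - "80:80"     # HTTP, also used for certificate validation
      - "443:443"   # HTTPS
    volumes:
      - certs:/etc/nginx/certs:ro
      - vhost:/etc/nginx/vhost.d
      - html:/usr/share/nginx/html
      # Read-only Docker socket: lets the proxy detect containers that set VIRTUAL_HOST
      - /var/run/docker.sock:/tmp/docker.sock:ro

  acme-companion:
    image: nginxproxy/acme-companion
    container_name: nginx-proxy-acme
    restart: always
    environment:
      DEFAULT_EMAIL: admin@example.com   # placeholder contact address
    volumes_from:
      - nginx-proxy                      # reuse the volumes mounted by the proxy
    volumes:
      - certs:/etc/nginx/certs:rw        # the companion writes the certificates
      - acme:/etc/acme.sh
      - /var/run/docker.sock:/var/run/docker.sock:ro

networks:
  default:
    name: nginx-proxy   # assumed name, must match the network other services join

volumes:
  certs:
  vhost:
  html:
  acme:
```

The proxy watches the Docker socket for containers that declare VIRTUAL_HOST, and the companion requests a certificate for each LETSENCRYPT_HOST it sees.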

Thanks to these two containers, I can expose any container super easily, by just adding these lines:

      website:
        environment:
          VIRTUAL_HOST: service.host.com
          LETSENCRYPT_HOST: service.host.com

    networks:
      default:
        external: true
        name: nginx-proxy

Git

Being a student in computer science, I started using git regularly, for all sorts of projects. Of course GitHub existed, but I liked the idea of having my own git server, where I could do what I wanted without any limitations. GitLab was always too resource-heavy for my servers. Gogs was a superb alternative: extremely lightweight with a nice interface. Gogs has now been forked into the much more popular Gitea, which I started using when it got up to speed.

Blogging systems

That was the topic of a previous blog post :)

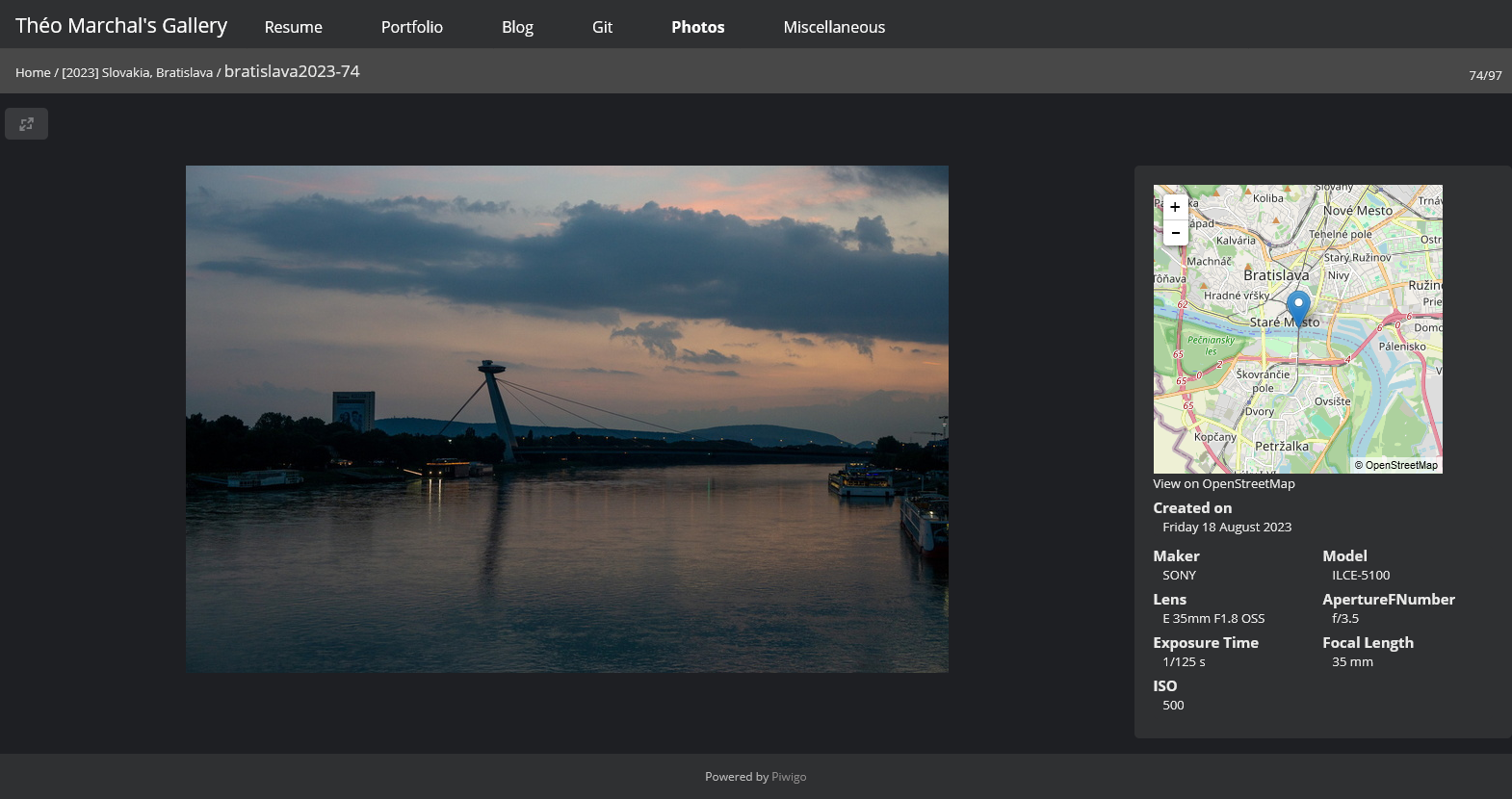

Piwigo

Piwigo is a gallery management system, with the goal of sharing photos with others. I wouldn't say I'm a huge fan of it, but to date, I haven't found anything better.

There's a lot to love with Piwigo: it has lots of plugins, and it is quite configurable... but its age is showing. Plugins are never really great, can be outdated or have features not working for years, and everything feels a bit clunky and non-intuitive. I don't want to sound harsh: open source projects are valuable, and I'm happy that this project exists, especially since I haven't found a suitable alternative. It's just that it is a bit frustrating. OpenStreetMap integration does not entirely work, the design has not been made with mobile in mind, so customization is hacky. It's the little things, but with enough time, it can be made into something nice, albeit with frustration in the process.

My photos are available through Piwigo here.

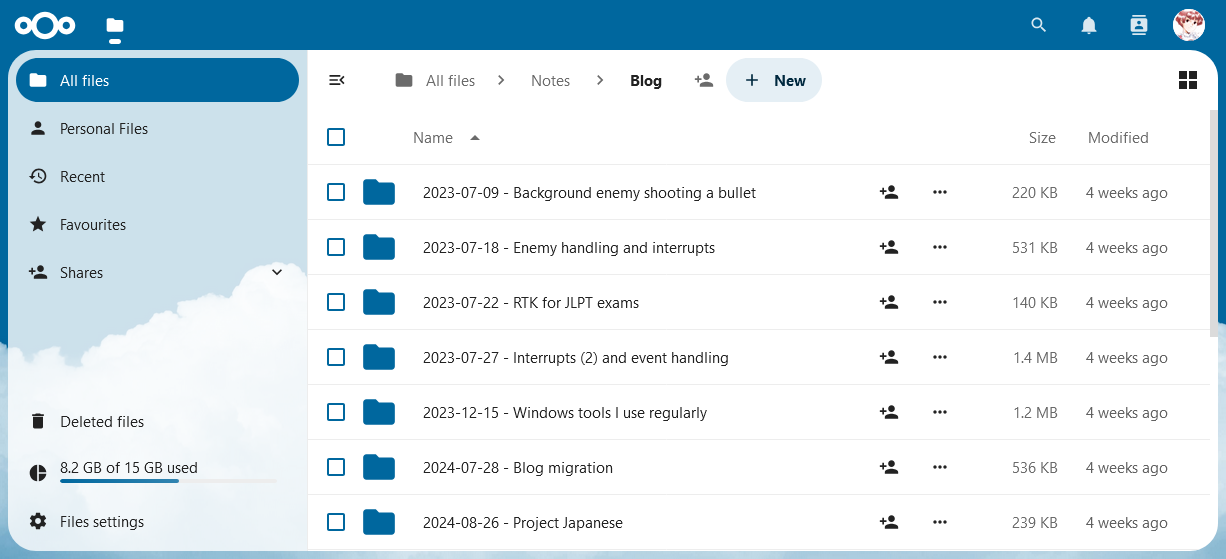

Nextcloud

Nextcloud is... a pretty mixed bag. It is a private cloud system, with user management and a lot of plugins. At first, I liked its concept so much that I wanted to use as many of its capabilities as possible. Cloud, calendars, contacts, RSS, bookmarks... I did that for a few years, but as time passed, I couldn't rely on it. The bookmarks plugin is really not great, and the RSS one has been broken for months. Nextcloud is managed by a company, and as much as I believe that this can be a great way for open-source software to have funds and be great... well, I'm not convinced by Nextcloud. There are too many things that are official, but supported by only one person, and are not up to date. For that reason, I reduced the features I used within Nextcloud, little by little. This year was the "nail in the coffin", as I now only use it as a cloud server to access my data, and nothing else.

Migrating to a VPS with an SSD has made Nextcloud considerably more responsive, especially the mobile app, which was literally not working with the slower hard drive. As I write this article, the Windows synchronization app is not working correctly: its menu stopped showing up following an update. It is these kinds of little things that are extremely frustrating, because they happen regularly, and when you need to access critical data, you want reliability and consistency. Sadly, after years of using Nextcloud, I cannot say that it offers that. Updates can easily break core features. I've been thinking about getting rid of it entirely for a few years now. Its promise is awesome, but it's definitely not delivering, and that is pretty disappointing.

Baikal

Trying to get away from Nextcloud, I decided to stop using its Calendar and Contacts capabilities. For example, one frustrating thing I never managed to do was to stop the local Nextcloud users from showing up in my contacts. I spent hours on configuration and got help from the forums, but I never found a working solution for my instance.

I decided to run a Baikal instance. It is a CalDAV/CardDAV server that is full of capabilities, but it is more focused on an API style of configuration than on a user interface. As a consequence, there are a lot of things that I never took the time to master. But well, the user interface is not that great with Nextcloud either (I would say the thing most lacking is an import feature that does not require a client). Basic functions are easy to get working, but other things require digging deeper. For example, I couldn't set up a shared calendar between users in a few minutes, so I created a new shared calendar specifically for that.

FreshRSS

FreshRSS, like Piwigo, feels a bit clunky and old. What I was using before was the RSS capabilities of Nextcloud, but they have not been working for more than a year, so I decided to use something else, focused on a single purpose. It is of course, as its name suggests, an RSS reader for the browser. It's not the most elegant or intuitive tool, but it does what it is supposed to do once it's set up. You can organize feeds by categories. I don't do much apart from this.

Bonus: did you know you can follow YouTube accounts and playlists through RSS? It's very convenient to not lose track of anything, and doesn't require you to have a Google account. Of course, you'll still be using the platform, but well... (you can of course also follow this blog!)

Linkding

Linkding is one of my favorite services to run. It's an open-source and self-hosted alternative to the likes of Pocket or Instapaper: a bookmarking tool, available anywhere. With browser extensions and an iOS app (though unofficial), a tagging system and a read/unread system, I have nothing to complain about. There's nothing to dislike!
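
To show how a service like this fits the pattern described in the nginx section, here is a hedged sketch of a Compose file for Linkding behind nginx-proxy. The hostname and the ./data folder are placeholders; the image name, port and data path follow the Linkding documentation as far as I know, so treat the whole file as an assumption rather than a reference configuration.

```yaml
# Sketch only: hostname and data folder are placeholders.
services:
  linkding:
    image: sissbruecker/linkding
    restart: always
    environment:
      VIRTUAL_HOST: links.host.com       # placeholder hostname served by nginx-proxy
      VIRTUAL_PORT: 9090                 # port the app listens on inside the container
      LETSENCRYPT_HOST: links.host.com   # hostname for the certificate request
    volumes:
      - ./data:/etc/linkding/data        # bookmarks data kept on the host

networks:
  default:
    external: true
    name: nginx-proxy
```

VIRTUAL_PORT is only needed because the app does not listen on port 80; no port has to be published on the host, since the proxy reaches the container through the shared network.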

Conclusion

As you have read, I have quite a few services that I am only half satisfied with. But I'd like to reiterate that those are free and open-source software, and most of them are or were made by people in their free time. I'm appreciative of the work, and I wouldn't be using them if I didn't like them or if I felt that they were not good enough for their purpose. And I would even less have written an article about them!

At first, managing my server was quite a lot of work. As time passed, new tools appeared, and as I mastered them, the amount of work necessary dropped dramatically. I would say that with a few days of learning Docker, it is quite accessible for anyone. It might not be the cheapest option, but I like having ownership of my data, and agency over the way I deliver it to the world.

All in all, even though I am not a professional system administrator, and have not done anything really related to that in my career, I feel that it is a valuable set of skills for software engineering. Docker is a powerful tool with a very wide range of uses. Being able to test things during development without messing with the host machine is very powerful and can be beneficial in countless use cases. I would suggest that anyone skeptical of Docker or containerization in general do themselves a favor and give it a try!